Apr 30, 2025

Video Models: The Data Challenge

We're excited to share this piece, authored by Adithya Iyer, who has been exploring this topic as part of our research team at Morphic.

We're excited to share this piece, authored by Adithya Iyer, who has been exploring this topic as part of our research team at Morphic.

Building foundational video models used to be the domain of the compute-rich. But that’s no longer the case. With the growing number of high-quality open-source models and tools, creating and customizing video diffusion models is becoming more accessible than ever, much like the turning point Stable Diffusion created for image generation. To help capitalize on this moment, we want to share some learnings cobbled together from various sources, some from the open domain and some from first-hand experiences building video models at Morphic. This article is the first of a 3 part series that we hope will get you started on building and customizing video diffusion models.

Fundamentally, video generative AI can be tackled as 3 separate challenges.

- Challenge 1 – The Data Challenge:

Collecting and cleaning millions of video clips - so your model can learn the distribution of videos, and, post-training, generate them. - Challenge 2 – The System Challenge:

Once you have a working prototype, the next challenge is scaling training across many GPUs to take advantage of larger batch sizes. - Challenge 3 – The Model Architecture Challenge:

Deciding on which base architecture you want to use. Most of the large video models in existence are Diffusion Models (https://arxiv.org/abs/2006.11239) - so we will make this assumption. We also assume most model backbones are transformer-based, like DiTs (https://arxiv.org/abs/2212.09748).

Challenge 1: The Data Challenge

This is where things get really interesting — and critical. This can be hugely detrimental to the task you are trying to accomplish if not handled correctly. A model is fundamentally upper-bounded by the data it sees during training. This is more drastic with video models as video models tend to demonstrate difficulty understanding physics (link: https://arxiv.org/html/2411.02385v1). The final dish is only as good as your ingredients, and your model is only as good as the data you feed it. In this article, we will use a commonly available dataset, OpenVid, to give you an overview of how to pre-process your data for various stages of tuning.

Here we share some of our challenges with creating datasets and techniques to pre-process them. Some other great resources are listed at the bottom of the page. OpenSORA and CogVideoX are also useful resources, and this blog will highlight the strengths of different approaches.

Video Datasets: What Exists in Open Source

First up, the easiest way to grab some data for training is to look at what's publicly available. Some of the possible datasets you could use are:

- WebVid: It is large and contains mostly Shutterstock footage. But it comes with a VERY large amount of watermarks, making it unusable for training.

- PANDA has severe quality issues. Contains a lot of low-res videos and fast-moving clips.

- OpenVid is a subset of PANDA and contains better clips from it. It still contains mostly just VLOG videos, with fast-moving cameras and videos of talking humans. OpenVidHD might also be worth a look.

- Other specific small datasets for niche use cases like RealEstate10K, Human Annotation data (Sapiens), etc. Some of these smaller datasets may be biased towards certain lighting conditions, slow-motion shots - doing a random sampling and visually inspecting these datasets before adding them to the large data mix should be a necessary sanity check.

Some of these datasets have additional metadata that may be useful. e.g, RealEstate10K has camera pose estimation, which can be used to train for camera control.

There is a large corpus of video online, but note that a lot of these are not free to use. We recommend using videos that are copyright-free or videos that are available on the web.

What kind of clips do you really want to train a good video model?

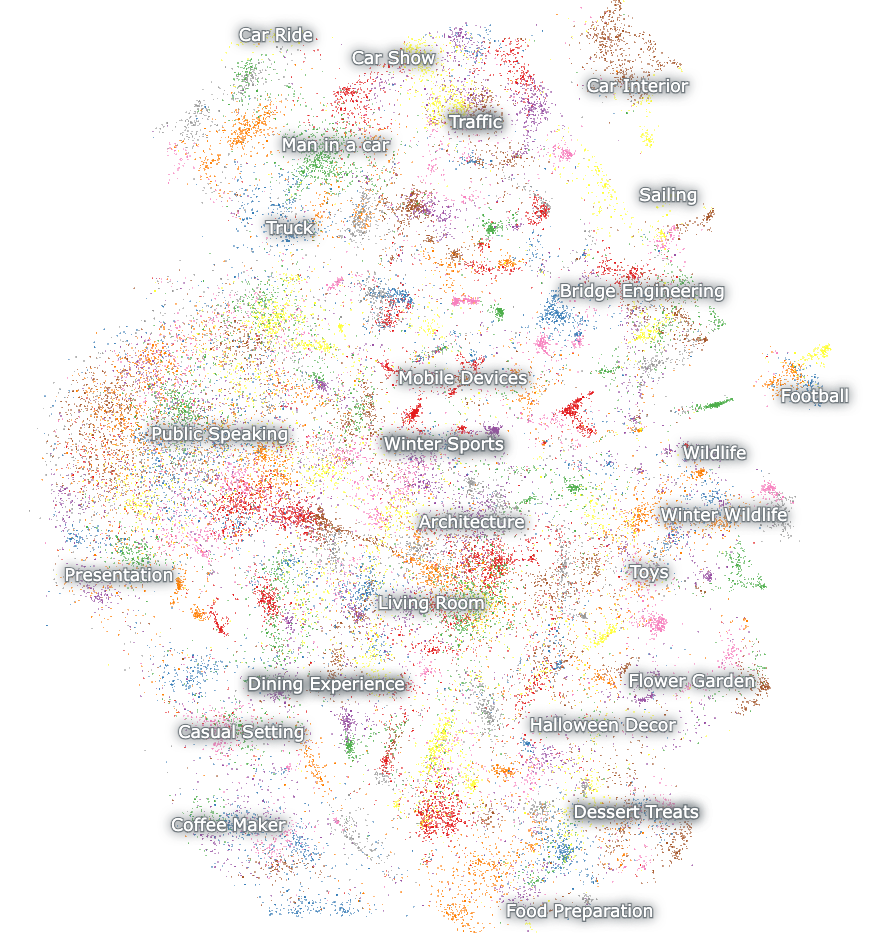

Video concept distribution is critical for achieving desired results when training a model. Data biases are very common, and open datasets rarely contain well-distributed data. The skew in concepts can be detrimental to your end result. A quick inspection using some open tools gives us an understanding of their content. We typically use Nomic Atlas to represent our data distribution.

Some popular categories are:

- Humans talking: A large part of publicly available clips are just this.

- Landscape shots: think zoom-out shots of mountains.

- Vehicles, Equipment, Product Reviews: A good chunk of HD clips are just these.

- News broadcasts with graphics: a big chunk of public data contains these; these might be useful if your application is lip-sync.

- PowerPoint presentation clips.

The data you want to learn from should ideally be similar to the end use case you imagine your model to have. For example, if you’re expecting your model to perform on sports clips, adding more samples of Sports Highlight might be your best bet. If your use case is human expression shots, having close-up clippings or podcasts will give you better alignment. The end use guides the data selection, and it usually has a greater impact on performance than model-architecture changes. It is beneficial to align your data distribution from the start.

Going from long-form video to short clips for training

Let's say you could acquire some raw data that contains the required clips within it. For example, a 40-minute documentary on Lions contains a 5-second clip of a herd drinking water, a perfectly reasonable training data clip, but how will you segment these clips at scale (think 10s of thousands of documentaries)?

Splitting into clips

We want to detect sudden camera changes as the first filter. Pyscene detection is the industry standard (used by Hunyuan, OpenSora, CogVideo, etc.), which uses the change in the illumination between frames as the cutoff. We've generally found it to be reliable, but it might miss the cutoff frames by 1 or 2 frames, and the thresholds vary depending on the data domain. Combining PySceneDetect with a trained classifier for content type will likely produce more robust results. Our recommendation would be to cut the long video into small clips and remove the first and last 2 frames for very consistent clips.

An alternative that can be used stand-alone or in addition to PySceneDetect is TransNetV2, a trained shot classifier that can be further fine-tuned on domain-specific scene cuts/transitions if needed.

You now have a large corpus of small clips, containing continuous shots of a scene with very few clips. The next job is to filter them.

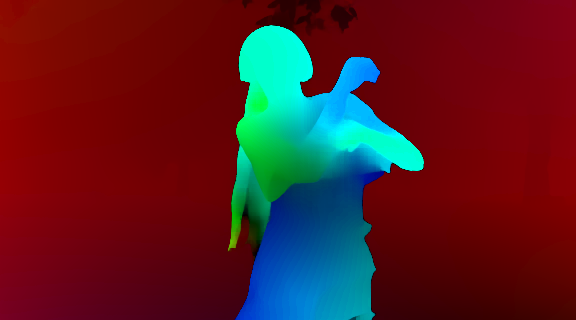

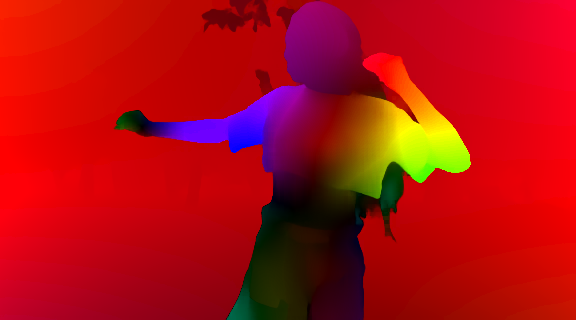

Looking at the optical flow

Here, red indicates low motion pixels, blue/green indicates greater motion.

Let's compare fast and slow optical flow videos. The first form of filter is to kick out very stationary clips. Our experiments and other literature show that training on stationary frames makes the model very quickly converge to a static motion model, and it’s crucial to only have clips with significant motion. You can use Unimatch or other optical flow libraries to get the flow between frames, and only retain clips with a higher total optical flow.

Based on our experimentation, 50-60% of the clips in natural videos get eliminated because of low optical flow.

Annotating to filter

Based on your use case, more complex filtering pipelines might be required. For example, if you are looking to train a model specifically good at live-action videos, you might want to filter videos that contain live-action frames. The easiest way to do this is to caption the video and filter whatever you want. The nuances involved with captioning, which we learn from our experiences, are:

- Use Video annotators for motion.

- Llava-Video, PLLAVA, AuroraFlow, or Gemini Flash 1.5 are possible VLM candidates that can annotate videos directly.

- These models are very good at understanding the characters, scene layout, backgrounds, and overall aesthetics of the video, and their outputs are trustworthy for these cases.

- A good way is to get a video annotation and then get a JSON output (for later filtering - for instance, you could have a field “isAnime” = True/False). Directly asking the VLM for JSON outputs makes the annotation worse (https://arxiv.org/pdf/2408.02442). And the best way to get structured output is to get a raw text output from the VLM and then use a smaller LLM (GPTo-mini, haiku) to summarise the annotations into JSON.

- Our observation is that this process has a critical flaw: the VLMs don’t understand motion. They can identify characters really well, but struggle with describing even the simplest motions (like right hand raised). Some manual re-annotation might be the way to fix these.

- Use images as video frames.

- A simpler option is to grab the middle frame from a video and ask the VLMs to annotate it. This helps you to get a rough list of all the objects in the video, the overall lighting, the camera angle, the type of shot, and other frame-level details.

- You can also use the frame annotation to filter out frames with text, segment clips into anime/live-action/vehicles, etc.

- Aesthetics, Dark Background - If you’re building a pure text-to-video model, filtering on aesthetic videos would be favorable. You can use an aesthetic score metric to get videos with a high aesthetic score.

Negating camera movement

Camera angles and movement are critical to any video filtering pipeline. Text-to-video prompts usually contain some form of camera requirement (zoom out, pan left). Using the video annotation is subpar - the VLMs correctly identified the camera movement only 10% of the time in our experiments. For early model training, still camera movement is a must.

We suggest getting camera motions analytically, a camera position movement is identified by the relative movement of the foreground and the background. The way to identify this is to use the following 2-step process:

- Segment out the subject and the background from the videos (https://huggingface.co/briaai/RMBG-2.0). This will isolate the core subject of the video.

- Track how the foreground and the background move by using a model like CoTracker.

This provides you with a trajectory map of the foreground and the background, which you can use to get a camera movement estimate. For example, to segment out still camera shots (and eliminate videos like vlogs), segment out all the videos that have a moving background.

High-res fine-tuning

Most models undergo post-training on high-resolution videos, with large movement scores. The best way to get these is to look at acquiring stock footage videos, which generally tend to be high-res, have existing motion, and have nearly smooth camera motion. Recent work like Step Video has seen significant improvement in quality after adding high-resolution fine-tuning.

The issues with the modern DIT-like pipelines and closing thoughts for the future

The biggest issue with modern Text2Image and Image2Video models is twofold:

- Over-correcting for aesthetic quality: This particularly shows in the Image to Video models, which seem to take any image and turn the video into a cinematic shot, oftentimes deviating conceptually from the original image too much. Models fail to ground the result in reality and the laws of physics and settle into an aesthetic minima. To build a truly world-understanding model, you need to optimize for a world that is far from perfect.

- Under-optimization on motion: Most of the existing video models excel in slow camera-moving still shots and have little prompt following ability for specific motions. For example, try asking any of the leading video models to “raise a character's right hand to their nose”.

We hope to fix these and build the most controllable video model in the world at Morphic. If you believe in the same, come say hi.

Most DiT-based models are used to create small 5-10 second clips for product placement, instructional videos, and promotions. They’re usually slow-motion shots, high resolution, and contain high aesthetic quality. Video models have not seen wide adoption in feature-length productions. You won’t see generative content being used in the making of Game of Thrones (yet), or being used to generate a vlog. But this is changing, and as those changes manifest, the data processes mentioned here will deprecate. We will keep updating this blog as we see new trends emerge and better pre- and post-processing strategies emerge in video models.